Crawling is an important process in search engine optimization (SEO) through which search engines discover new and updated content on the web. For anyone involved in digital marketing, understanding crawling is essential, as it significantly influences a website’s visibility and ranking in search engine results pages (SERPs). In this guide, we’ll explore how crawling works, why it matters, how to check for crawling issues, how crawling differs from indexing, and strategies to optimize your website for better crawling. We’ll also share tips to improve your site’s crawlability and answer frequently asked questions.


What is Crawling in SEO?

At its core, crawling in SEO refers to the process by which search engines, such as Google or Bing, systematically browse the web to find new and updated content. This is done using “crawlers” or “spiders”—automated programs that follow links from page to page, collecting data about each webpage they visit.

Once crawlers have accessed a webpage, they analyze its content, code, and structure to understand what the page is about. The information gathered during crawling is then used for indexing, another key process that allows search engines to store and organize the information to present relevant results to users during a search.

Why is Crawling Important in SEO?

Crawling is the first step toward ranking web pages. If your pages aren’t crawled, they won’t be indexed, and as a result they won’t appear in SERPs. Ensuring your website is crawlable is critical for improving visibility and driving organic traffic.

How Do Search Engines Crawl Websites?

Search engines rely on specialized bots known as web crawlers to explore the internet. Google’s crawler, for example, is called Googlebot. Here’s how the process typically works:

- Initial Crawl: The crawler starts from a list of known URLs, gathered from previous crawls and from sitemaps submitted by site owners. It often begins with popular, highly trusted websites and follows the links on these pages to discover new content.

- Following Links: As crawlers visit each page, they extract hyperlinks embedded in the content, allowing them to discover new pages to crawl. This process is recursive and continuous.

- Data Collection: Crawlers collect information on each page they visit, including the HTML structure, keywords, images, and metadata.

- Page Evaluation: Once a page is crawled, search engines evaluate its content based on various SEO factors, such as keyword relevance, page speed, and mobile-friendliness.
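To make the link-following step concrete, here is a minimal Python sketch of a breadth-first crawler. It is a toy, not a description of how Googlebot actually works: the `fetch` function and the in-memory `SITE` dictionary are hypothetical stand-ins for real HTTP requests, and a production crawler would also respect robots.txt, throttle its requests, and handle errors.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlsplit


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl from seed_urls, staying on the first seed's host.

    fetch(url) must return the page's HTML, or None if the page is unavailable.
    Returns the URLs that were successfully fetched, in crawl order.
    """
    host = urlsplit(seed_urls[0]).netloc
    queue = deque(seed_urls)
    seen = set(seed_urls)
    crawled = []
    while queue and len(crawled) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # unavailable page: nothing to extract
        crawled.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links against the current page and drop #fragments
            absolute, _ = urldefrag(urljoin(url, href))
            if urlsplit(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return crawled


# Hypothetical four-page site held in memory instead of fetched over HTTP
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog/">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/": '<a href="post-1">Post</a> <a href="https://other.org/">Elsewhere</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}

print(crawl(["https://example.com/"], SITE.get))
```

Each page is fetched once, its links are resolved and queued, and the link to other.org is skipped because this sketch only follows links on the same host.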

Frequency of Crawling

Search engines don’t crawl every page equally or constantly. Popular websites with frequently updated content (like news sites) may be crawled more often, while less active sites may be crawled less frequently. The crawl budget, or the number of pages a search engine will crawl on your site within a certain time frame, is an essential concept for large sites to consider.
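Search engines don’t publish a fixed crawl budget, but a little arithmetic shows why it matters for large sites. The sketch below assumes a steady, hypothetical rate of 2,500 fetches per day (in practice you might estimate this from the Crawl Stats report in Google Search Console) and shows how duplicate parameter URLs stretch the time needed to reach every page. Real crawlers revisit important pages more often rather than sweeping every URL uniformly, so treat this as a rough model.

```python
import math


def days_to_crawl_all(total_urls, fetches_per_day):
    """Days needed to fetch every URL once at a steady daily crawl rate."""
    return math.ceil(total_urls / fetches_per_day)


# Hypothetical site: 20,000 real pages plus 30,000 duplicate parameter URLs
real_pages = 20_000
duplicate_urls = 30_000
fetches_per_day = 2_500  # assumed rate, not a published search engine figure

print(days_to_crawl_all(real_pages + duplicate_urls, fetches_per_day))  # 20
print(days_to_crawl_all(real_pages, fetches_per_day))  # 8
```

In this model, removing the duplicate URLs lets the crawler cover every real page in less than half the time.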

Importance of Crawling in SEO

Crawling in SEO is critical because it lays the groundwork for a search engine’s ability to index and rank your site’s content. Without crawling, your site won’t appear in search results at all. But crawling goes beyond just visibility—it affects every aspect of SEO performance, from ranking positions to site traffic.

Key Benefits of Crawling In SEO:

- Indexing: The primary function of crawling is to discover pages so they can be indexed, enabling them to appear in search results.

- Ranking Potential: Search engines only rank indexed pages, so being crawled is a prerequisite for ranking at all.

- Fresh Content Discovery: Frequent crawling ensures search engines find your newly updated content quickly, helping to keep your website relevant in fast-moving niches.

- Identifying Technical Issues: Crawling can reveal technical SEO issues, such as broken links, slow loading times, or pages that are not optimized for mobile devices.

Common Crawling Issues and How to Fix Them

Not all websites are crawled equally, and some may experience crawling issues that affect their SEO. Let’s dive into some common problems and their solutions:

a) Blocked by Robots.txt

One of the most frequent crawling issues arises when a site’s robots.txt file unintentionally blocks crawlers from accessing certain pages or entire sections of a website. The robots.txt file tells crawlers which parts of the website they can or cannot crawl.

Solution: Regularly audit your robots.txt file to ensure that important pages are not blocked. You can use tools like Google Search Console to check for errors related to robots.txt.

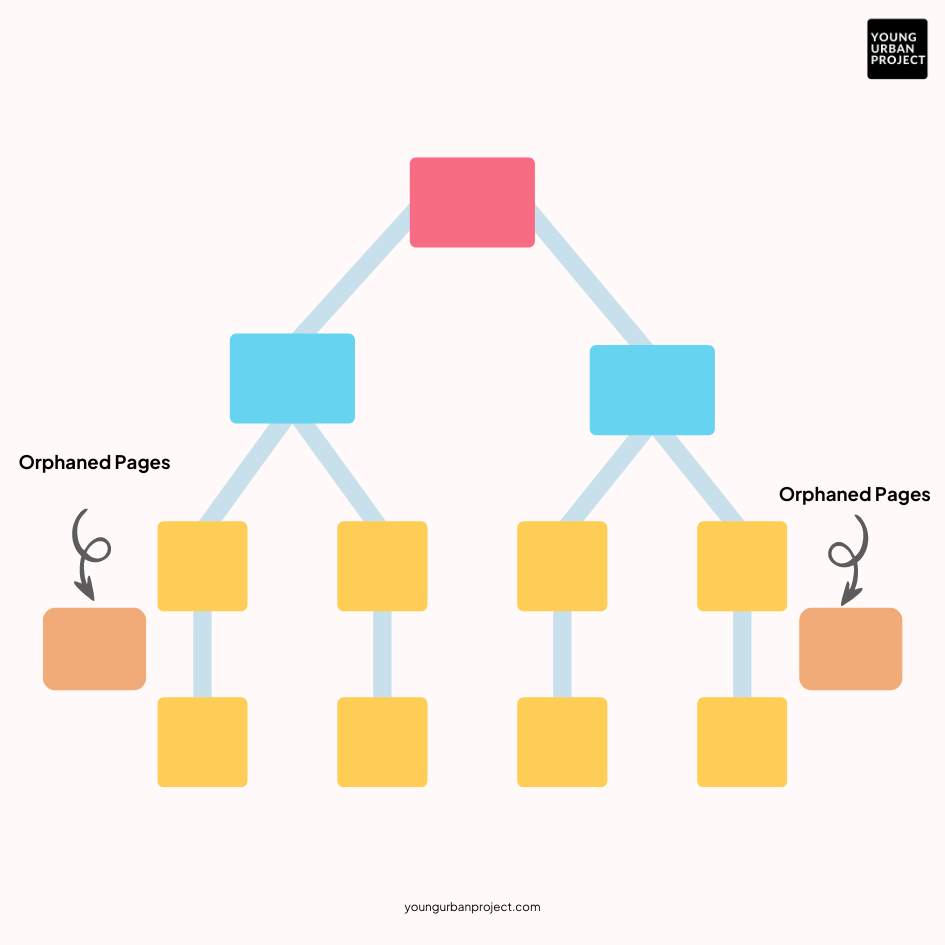
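You can also spot-check a robots.txt file locally with Python’s standard `urllib.robotparser` module. The rules and URLs below are hypothetical; the `Disallow: /blog/` line plays the role of an accidental block. Python’s parser applies rules in file order and doesn’t understand Google’s `*` and `$` path wildcards, so for more complex files, confirm the result in Google Search Console.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt where "Disallow: /blog/" was added by mistake
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/
"""

# Hypothetical pages that must stay crawlable
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/products/shoes",
    "https://example.com/blog/crawling-guide",
]


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
```

Here only the blog post is reported as blocked, which is exactly the kind of accidental rule an audit should catch.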

b) Orphaned Pages

Orphaned pages are pages on your website that no other page on your site links to. Since crawlers rely on links to discover pages, these orphaned pages may remain uncrawled.

Solution: Ensure that every page on your website is internally linked to other relevant pages, making it easier for crawlers to find them.
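One way to find orphaned pages is to compare the pages you know exist (for example, from your CMS or sitemap) with the pages reachable by following internal links from the homepage. The sketch below does this on a hypothetical link graph; in practice the graph would come from a site crawler’s export.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to
LINKS = {
    "/": ["/products/", "/blog/"],
    "/products/": ["/products/shoes"],
    "/products/shoes": ["/"],
    "/blog/": ["/blog/crawling-guide"],
    "/blog/crawling-guide": ["/blog/"],
    "/landing/spring-sale": ["/products/"],  # links out, but nothing links in
}

# Hypothetical list of pages you expect to be crawled
KNOWN_PAGES = set(LINKS)


def find_orphans(links, known_pages, home="/"):
    """Return known pages that can't be reached by following links from home."""
    reachable = {home}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(known_pages - reachable)


print(find_orphans(LINKS, KNOWN_PAGES))
```

The landing page is flagged even though it links to other pages, because no page links to it.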

c) Broken Links and Redirect Chains

Broken links and complex redirect chains can slow down crawlers or prevent them from fully exploring your site. This can lead to important pages not being crawled and indexed.

Solution: Regularly run website audits to identify broken links, then fix them by updating the links or adding appropriate redirects. Where a link passes through several redirects, point it directly at the final destination URL.
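The sketch below shows the kind of logic such an audit applies, using a hypothetical `RESPONSES` table in place of live HTTP requests. It follows each internal link’s redirects, flags links that end in an error, and flags chains longer than one hop (treating a single redirect as acceptable is a judgment call, not a search engine rule). It stops after ten hops so a redirect loop can’t run forever.

```python
# Hypothetical audit data: URL -> (HTTP status, redirect target or None)
RESPONSES = {
    "/old-page": (301, "/older-page"),
    "/older-page": (301, "/new-page"),
    "/new-page": (200, None),
    "/moved": (301, "/gone"),
    "/gone": (404, None),
    "/about": (200, None),
}

REDIRECT_CODES = {301, 302, 307, 308}


def trace(url, responses, max_hops=10):
    """Follow redirects from url and return (hops, final_url, final_status).

    URLs missing from `responses` are treated as 404s.
    """
    hops = 0
    status, target = responses.get(url, (404, None))
    while status in REDIRECT_CODES and hops < max_hops:
        url = target
        hops += 1
        status, target = responses.get(url, (404, None))
    return hops, url, status


def audit(internal_links, responses):
    """Return (link, problem) pairs for broken links and multi-hop chains."""
    issues = []
    for link in internal_links:
        hops, final_url, status = trace(link, responses)
        if status in REDIRECT_CODES:
            issues.append((link, f"still redirecting after {hops} hops (loop?)"))
        elif status >= 400:
            issues.append((link, f"broken: ends at {final_url} with {status}"))
        elif hops > 1:
            issues.append((link, f"{hops}-hop chain: link directly to {final_url}"))
    return issues


for link, problem in audit(["/old-page", "/moved", "/about"], RESPONSES):
    print(link, "->", problem)
```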

d) Poor URL Structure

Search engines prefer clean, simple URLs that clearly describe the page content. Complicated or overly long URLs can confuse crawlers, leading to missed indexing opportunities.

Solution: Optimize your website’s URL structure to be simple, descriptive, and concise. Avoid using unnecessary parameters or dynamic URLs that can lead to crawling inefficiencies.
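If parameterized URLs can’t be avoided entirely, it helps to know which variants lead to the same content. This sketch normalizes URLs by lowercasing the host, dropping parameters assumed not to change the page (tracking `utm_` parameters plus hypothetical `sessionid` and `ref` parameters), and sorting the rest. Which parameters are safe to drop depends entirely on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: on this hypothetical site, these parameters never change page content
IGNORED_PARAMS = {"sessionid", "ref"}
IGNORED_PREFIXES = ("utm_",)


def normalize_url(url):
    """Lowercase the host, drop content-neutral parameters, and sort the rest."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
        and not key.lower().startswith(IGNORED_PREFIXES)
    )
    # The fragment (#...) is never sent to the server, so it is dropped too
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


variants = [
    "https://Example.com/shoes?utm_source=newsletter&color=red&size=9",
    "https://example.com/shoes?size=9&color=red&sessionid=abc123",
    "https://example.com/shoes?color=red&size=9&ref=footer#reviews",
]
print({normalize_url(u) for u in variants})
```

All three variants collapse to a single URL, which suggests a crawler fetching them separately would be spending effort on duplicate content.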

Tools to Monitor Website Crawling

To ensure that search engines are crawling your site efficiently, you can use several tools to monitor and improve the crawling process:

a) Google Search Console

This free tool from Google allows you to monitor how well your website is being crawled and indexed. You can submit sitemaps, check for crawl errors, and see which pages are indexed.

b) Screaming Frog SEO Spider

A robust website crawler tool that helps you analyze your website from the same perspective as a search engine. It highlights crawl errors, broken links, and duplicate content issues.

c) Ahrefs Site Audit

Ahrefs’ tool provides detailed reports on crawlability issues and technical SEO problems, making it easier to fix problems before they affect your rankings.

Optimizing Your Site for Better Crawling

To make sure that search engines crawl your website efficiently, here are some best practices you can follow:

a) Create and Submit an XML Sitemap

An XML sitemap lists the important pages on your website, helping search engines discover them. Submitting your sitemap through Google Search Console or Bing Webmaster Tools helps search engines find your pages, although it doesn’t guarantee that every listed page will be crawled or indexed.
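A sitemap is a plain XML file following the sitemaps.org protocol, so it is easy to generate. Here is a minimal sketch using Python’s built-in `xml.etree.ElementTree`; the page URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML (as UTF-8 bytes) for an iterable of (url, lastmod) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc
        ET.SubElement(entry, "lastmod").text = lastmod  # W3C date, e.g. 2024-05-01
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical pages and last-modified dates
pages = [
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/blog/crawling-guide", "2024-05-10"),
]
print(build_sitemap(pages).decode("utf-8"))
```

ElementTree escapes characters such as `&` in URLs automatically, which the protocol requires. A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed, so larger sites split their URLs across several files.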

b) Improve Internal Linking

Internal linking helps crawlers navigate your site more effectively. Ensure that your key pages are linked from other high-traffic pages, making them more discoverable.
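A rough way to check internal linking is to count how many pages link to each key page. The sketch below does this on a hypothetical link graph; a page with few inbound internal links may be harder for crawlers to find. Link counts don’t capture which linking pages get the most traffic, so treat them as a starting point.

```python
from collections import Counter

# Hypothetical internal link graph: page -> pages it links to
LINKS = {
    "/": ["/products/", "/blog/", "/contact"],
    "/products/": ["/products/shoes", "/contact"],
    "/blog/": ["/blog/crawling-guide", "/contact"],
    "/blog/crawling-guide": ["/products/shoes"],
    "/products/shoes": ["/contact"],
    "/contact": [],
}


def inbound_counts(links):
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


KEY_PAGES = ["/products/shoes", "/blog/crawling-guide"]
counts = inbound_counts(LINKS)
for page in sorted(KEY_PAGES, key=lambda p: counts[p]):
    print(f"{page}: {counts[page]} inbound internal links")
```

In this example the contact page collects four inbound links while the crawling guide has only one, so the guide is a candidate for links from more prominent pages.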

c) Ensure Mobile-Friendliness

Google uses mobile-first indexing, meaning it primarily uses the mobile version of your site for crawling and indexing. Ensure your website is responsive and offers a seamless mobile experience to maximize crawling efficiency.
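A responsive site normally declares a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`. The sketch below checks a page’s HTML for that tag using Python’s built-in `html.parser`. It is only a quick heuristic: having the tag doesn’t make a page mobile-friendly on its own, and the sample HTML is hypothetical.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content attribute of a <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares a viewport that tracks the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()


print(has_responsive_viewport(
    '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
))
print(has_responsive_viewport("<head><title>Desktop-only page</title></head>"))
```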

d) Speed Up Your Website

Search engines favor fast-loading websites. If your server responds slowly, crawlers may fetch fewer pages per visit, leaving parts of the site unexplored. Use tools like Google PageSpeed Insights to identify speed issues and optimize your website’s performance.

e) Fix Crawl Errors Promptly

Regularly check for crawl errors in Google Search Console and fix them as soon as possible. Addressing issues such as 404 errors, server errors, and blocked resources can significantly improve crawling efficiency.
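Server access logs show crawl errors from the crawler’s side too. This sketch assumes logs in the widely used Combined Log Format and counts 4xx and 5xx responses served to requests whose user agent mentions Googlebot. The log lines are hypothetical, and because a user agent string can be faked, Google recommends verifying real Googlebot traffic with a reverse DNS lookup before acting on it.

```python
import re
from collections import Counter

# Combined Log Format:
# ip ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_errors(lines):
    """Count (status, path) pairs for 4xx/5xx responses to Googlebot-labelled requests."""
    errors = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # unparseable line or a different client
        status = int(match["status"])
        if status >= 400:
            errors[(status, match["path"])] += 1
    return errors


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Hypothetical log lines (IP addresses from the documentation ranges)
SAMPLE_LOG = [
    f'192.0.2.10 - - [10/May/2024:06:25:13 +0000] "GET /blog/old-post HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.10 - - [10/May/2024:06:25:20 +0000] "GET /products/shoes HTTP/1.1" 200 10240 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.10 - - [10/May/2024:06:26:02 +0000] "GET /search?q=x HTTP/1.1" 500 0 "-" "{GOOGLEBOT_UA}"',
    '198.51.100.7 - - [10/May/2024:06:27:45 +0000] "GET /blog/old-post HTTP/1.1" 404 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

for (status, path), count in googlebot_errors(SAMPLE_LOG).items():
    print(status, path, count)
```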

Conclusion: Mastering Crawling In SEO

Crawling in SEO is an essential process that directly affects whether your website gets indexed and ranked by search engines. By understanding how crawling works, identifying common issues, and following best practices to optimize your site, you can ensure that search engines discover and rank your content effectively. Regular monitoring through tools like Google Search Console and Screaming Frog can help you stay ahead of crawling issues and maintain your site’s visibility in search results.

With this knowledge, you’ll be well on your way to mastering the intricacies of crawling and securing better SEO performance for your website.

FAQs: What Is Crawling In SEO?

1. What is crawling in SEO?

Crawling in SEO is the process by which search engines, like Google, use automated programs known as web crawlers or spiders to discover and access web pages. These crawlers follow links across the internet, collecting data on each webpage to understand its content and structure, which helps in indexing and ranking.

2. Why is crawling important for SEO?

Crawling is essential for SEO because it is the first step in getting your website indexed by search engines. If your site isn’t crawled, it won’t be indexed, which means it won’t appear in search results, limiting your chances of attracting organic traffic.

3. How can I make sure my website gets crawled by search engines?

To ensure your website is crawled, you can:

- Submit an XML sitemap to Google Search Console.

- Improve internal linking to make pages easier for crawlers to find.

- Avoid blocking important pages with the robots.txt file.

- Fix crawl errors promptly and ensure fast page loading speeds.

4. What is the difference between indexing and crawling in SEO?

Crawling is the process of discovering new or updated pages by search engine bots, while indexing is the process of storing and organizing the information collected from crawling. Once a page is crawled, it is evaluated for inclusion in the search engine’s index, where it can appear in search results.

5. How often do search engines crawl a website?

The frequency of crawling depends on factors like the size of the website, how frequently content is updated, and the overall authority of the site. Popular or frequently updated websites may be crawled multiple times a day, while smaller or less active sites may be crawled less frequently.

6. What is a crawl budget, and how does it affect my website?

A crawl budget is the number of pages a search engine will crawl on your website within a given period. Large websites must manage their crawl budget efficiently to ensure that their most important pages get crawled regularly. Optimizing your site’s structure and internal linking can help improve crawl efficiency.

7. What are common issues related to crawling in SEO?

Common crawling issues include:

- Pages blocked by the robots.txt file.

- Orphaned pages (pages with no internal links).

- Broken links or redirect chains.

- Slow page loading times.

8. How can I check if my site is being crawled correctly?

You can use tools like Google Search Console to monitor crawling activity on your site. It provides reports on crawl errors, indexed pages, and blocked resources. You can also use Screaming Frog SEO Spider to simulate a crawl and detect issues from the search engine’s perspective.

9. Does mobile-friendliness affect crawling in SEO?

Yes, mobile-friendliness is a key factor in crawling and indexing. Google uses mobile-first indexing, meaning it prioritizes the mobile version of your website for crawling. Ensuring your website is responsive and provides a smooth mobile experience is essential for efficient crawling.

10. Can a slow website impact crawling?

Yes, if your website is slow, crawlers may not explore all of its pages efficiently. Search engines prefer fast-loading websites, and slow page speeds can lead to incomplete crawling, affecting your site’s ability to be fully indexed.