Artificial intelligence is evolving at breakneck speed. Among all these advancements, multimodal AI is quickly becoming a buzzword in 2025. But what is multimodal AI, and why is everyone in the tech world talking about it?

Imagine an AI system that can understand text, images, audio, and video simultaneously, making its understanding of context and intent much richer. That’s the promise of multimodal AI. It’s the technology behind models like Google Gemini, which can process inputs like text, images, and voice at the same time.

This blog covers what you need to know about multimodal AI: what it is, how it works, where it is applied, and which emerging trends are reshaping industries.

What is Multimodal AI?

At its core, multimodal AI is an advanced type of artificial intelligence that integrates and processes multiple types of data, also called “modalities.” Modalities include text, images, audio, and even video. Unlike unimodal AI systems, which focus on only one type of data (like just text or just images), multimodal AI combines different inputs to give a deeper, more integrated understanding of a task.

Example of Multimodal AI

Let’s say you’re using Google Gemini, a virtual assistant powered by multimodal AI.

Here’s what it can do—all at once:

- Read aloud an email you just received (that’s text).

- Look at an attached image and tell you what’s in it (that’s visual data).

- Reply using voice, so you don’t have to type (that’s audio).

It’s like talking to a super-smart friend who understands everything—words, pictures, and even tone of voice—at the same time.

This makes your interaction feel more natural. No switching apps. No repeating yourself. Just smooth, smart help.

Multimodal AI vs. Unimodal AI

Here’s a simple comparison of unimodal and multimodal AI systems:

| Feature | Unimodal AI | Multimodal AI |
| --- | --- | --- |
| Input Type | Single (text, image, etc.) | Multiple (text + image + audio, etc.) |
| Context Understanding | Limited | Richer and more integrated |
| Applications | Specialized tasks | Complex and diverse tasks |

Multimodal AI fills the gaps that unimodal systems leave behind, making it a natural evolution in artificial intelligence.

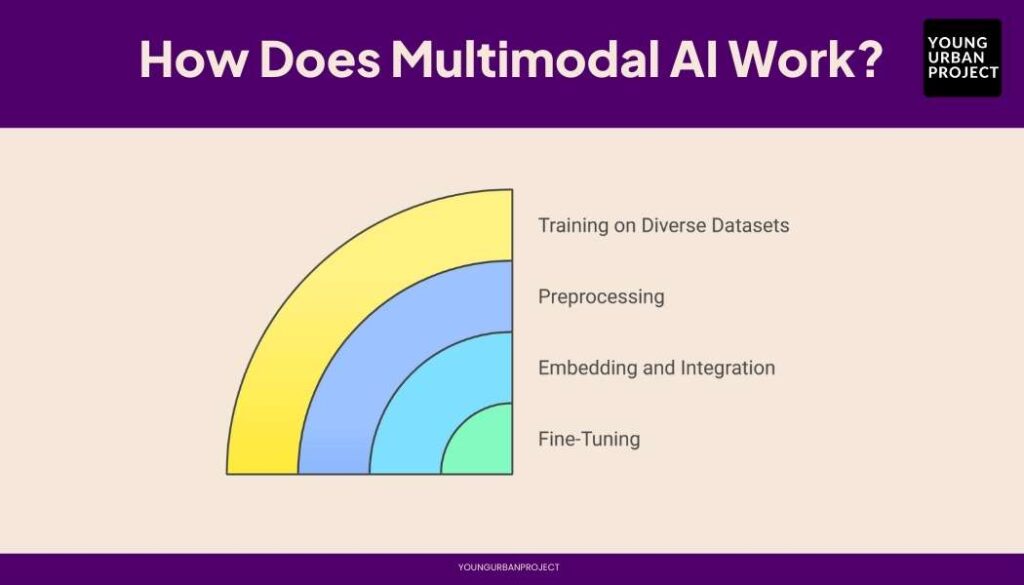

How Does Multimodal AI Work?

1. Training AI Models on Large and Diverse Datasets

AI models learn by looking at tons of examples. For multimodal AI, these examples include different types of data like pictures, text, and sounds. The more examples the AI sees, the better it gets at understanding patterns across these different types of information.

2. Transforming Raw Inputs into Machine-Readable Data

Computers can’t directly understand images or sounds like we do. First, the system changes raw data (like pictures or voice) into numbers that machines can work with. This process, called preprocessing, makes the different types of data ready for the AI to analyze.
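To make this step concrete, here is a minimal sketch of preprocessing for three modalities. The function names, the toy vocabulary, and the sample values are all made up for illustration; real systems use trained tokenizers and far more elaborate image and audio pipelines.

```python
def tokenize(text, vocab):
    """Map each lowercase word to an integer id; unknown words get id 0."""
    return [vocab.get(word, 0) for word in text.lower().split()]


def normalize_pixels(pixels):
    """Scale 8-bit pixel values (0-255) into the range 0.0-1.0."""
    return [[value / 255 for value in row] for row in pixels]


def normalize_audio(samples):
    """Scale 16-bit audio samples into the range -1.0 to 1.0."""
    return [sample / 32768 for sample in samples]


# Toy inputs, invented for this example.
vocab = {"a": 1, "dog": 2, "barks": 3}
token_ids = tokenize("A dog barks loudly", vocab)   # "loudly" is not in vocab
image = normalize_pixels([[0, 255], [51, 204]])     # a 2x2 grayscale image
audio = normalize_audio([0, 16384, -32768])         # three audio samples

print(token_ids)
print(image)
print(audio)
```

After this step, every input is a list of numbers, whatever form it started in.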

3. Embedding and Integrating Data from Different Modalities

The AI turns each type of input (like text or images) into special number patterns called embeddings. These number patterns capture what the data means. The system then combines these patterns from different sources to understand the complete picture.
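One common way to compare embeddings is cosine similarity, which measures how closely two vectors point in the same direction. The sketch below uses hand-written three-number embeddings purely for illustration; a real model would produce vectors with hundreds or thousands of dimensions, learned from data.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy embeddings, invented for this example (not produced by any real model).
image_of_dog = [0.9, 0.1, 0.2]
text_dog = [0.8, 0.2, 0.1]
text_car = [0.1, 0.9, 0.3]

sim_dog = cosine_similarity(image_of_dog, text_dog)
sim_car = cosine_similarity(image_of_dog, text_car)

# The dog photo should score higher against "dog" than against "car".
print(sim_dog > sim_car)
```

When embeddings from different modalities live in the same space, a comparison like this lets the system tell that a picture and a phrase are about the same thing.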

Early Fusion vs. Late Fusion

Early fusion combines different data types right at the start. Late fusion keeps data separate longer and only combines the results. It’s like mixing cake ingredients before baking (early) versus making separate parts and combining them later (late).
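The difference can be sketched in a few lines. Here `linear_score` is a hypothetical stand-in for a trained model, and the feature values and weights are invented; the point is only where the combination happens.

```python
def linear_score(features, weights):
    """A stand-in 'model': a weighted sum of the input features."""
    return sum(f * w for f, w in zip(features, weights))


# Toy features for one example, invented for illustration.
text_features = [0.2, 0.7]
image_features = [0.9, 0.4]

# Early fusion: join the features first, then run ONE model on the result.
early_input = text_features + image_features
early_score = linear_score(early_input, [0.5, 0.5, 0.25, 0.25])

# Late fusion: run a SEPARATE model per modality, then combine the outputs.
text_score = linear_score(text_features, [0.5, 0.5])
image_score = linear_score(image_features, [0.5, 0.5])
late_score = (text_score + image_score) / 2

print(early_score, late_score)
```

Early fusion lets a single model learn interactions between modalities, while late fusion keeps each model simpler and easier to swap out. Which works better depends on the task.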

4. Fine-Tuning Models for Task-Specific Performance

After training on general data, the AI gets extra training on specific tasks. This helps it get better at particular jobs like medical image analysis or voice assistants. The extra training makes the AI more accurate for the exact job you need it to do.
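One common fine-tuning pattern is to keep a pretrained encoder fixed and train only a small task-specific layer on top. The sketch below assumes that pattern; `frozen_encoder` is a hypothetical stand-in for a pretrained model, and the four-example dataset is made up.

```python
import math


def frozen_encoder(x):
    """Stand-in for a pretrained encoder; its 'weights' never change here."""
    return [x[0] + x[1], x[0] - x[1]]


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def predict(weights, bias, features):
    """Probability that the example belongs to class 1."""
    return sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)


# Tiny task dataset, invented for this example: (raw input, label).
data = [([1.0, 0.0], 1), ([0.9, 0.2], 1), ([0.0, 1.0], 0), ([0.1, 0.8], 0)]

# Only this small "head" is trained; the encoder above stays frozen.
weights, bias = [0.0, 0.0], 0.0
learning_rate = 0.5
for _ in range(200):
    for x, label in data:
        features = frozen_encoder(x)
        # Gradient of the log loss with respect to the pre-sigmoid score.
        error = predict(weights, bias, features) - label
        weights = [w - learning_rate * error * f for w, f in zip(weights, features)]
        bias -= learning_rate * error

predictions = [round(predict(weights, bias, frozen_encoder(x))) for x, _ in data]
print(predictions)
```

Because only the head is updated, this kind of training needs far less data and compute than training the whole model from scratch.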

Key Components of a Multimodal AI System

1. Modality

A modality is just a fancy word for a type of information. Humans use different modalities all the time – we see, hear, read, and touch. In AI, each different input type (like speech or images) is a separate modality that the system needs to understand.

2. Type of data used

Multimodal systems work with many data types. Text comes from books, websites, and social media. Images include photos and diagrams. Audio contains speech and sounds. Video combines images and sound. Each type needs different handling before the AI can use it.

3. Machine learning and neural networks

Neural networks are computer systems inspired by human brains. They use layers of math to find patterns in data. In multimodal AI, specialized networks process each type of data. Some handle images, while others work with text or sound before combining results.

4. Role of large language models in multimodal learning

Language models like GPT help multimodal systems understand meaning across different inputs. They act like translators between different types of data. These models help the system connect what it sees in an image with words that describe it, making everything work together better.

Popular Multimodal AI Tools and Models

Several cutting-edge tools and models are paving the way for multimodal AI in 2025:

- GPT-4 (OpenAI): Handles text and image inputs.

- Google Gemini (Google): Combines text, images, and voice for unified workflows.

- CLIP (OpenAI): Learns a shared embedding space for images and text, which enables tasks such as zero-shot image classification.

- VisualBERT (available through Hugging Face Transformers): A research model that integrates language and vision in a single transformer.

- MUM (Google): The Multitask Unified Model, which Google uses in Search to interpret text and images together.

Real-World Applications of Multimodal AI

1. Virtual assistants

Modern assistants like Gemini, ChatGPT, and Siri can now understand multiple types of inputs together. They process text, images, and voice at the same time. This lets you show a picture while asking a question, and the assistant understands both to give better answers.

2. Medical imaging and diagnostics

Doctors use AI that combines patient records with medical scans. This helps find diseases early by looking at both the pictures and the patient’s history. The AI can spot things in X-rays or MRIs that match with symptoms in the written notes.

3. E-commerce: Search by image or description

Online shops now let you search with pictures or words. Just snap a photo of shoes you like, and the store finds similar ones. You can also describe what you want, and the system shows matching products, making shopping faster.

4. Social media moderation

AI helps keep social media safe by checking both pictures and words in posts. It can spot harmful content by understanding that some normal-looking photos become harmful when paired with certain text. This catches rule-breaking posts that a text-only or image-only check would miss.

5. Multimodal content creation

New AI tools can turn your written ideas into videos or pictures. Type what you want, and the AI creates matching visuals. Some systems can even take a rough sketch and voice description to make professional-looking videos or artwork.

6. Smart surveillance systems

Security cameras now use AI that watches video while listening for unusual sounds. These systems can tell the difference between normal activity and dangerous situations by combining what they see and hear, helping security teams respond faster.

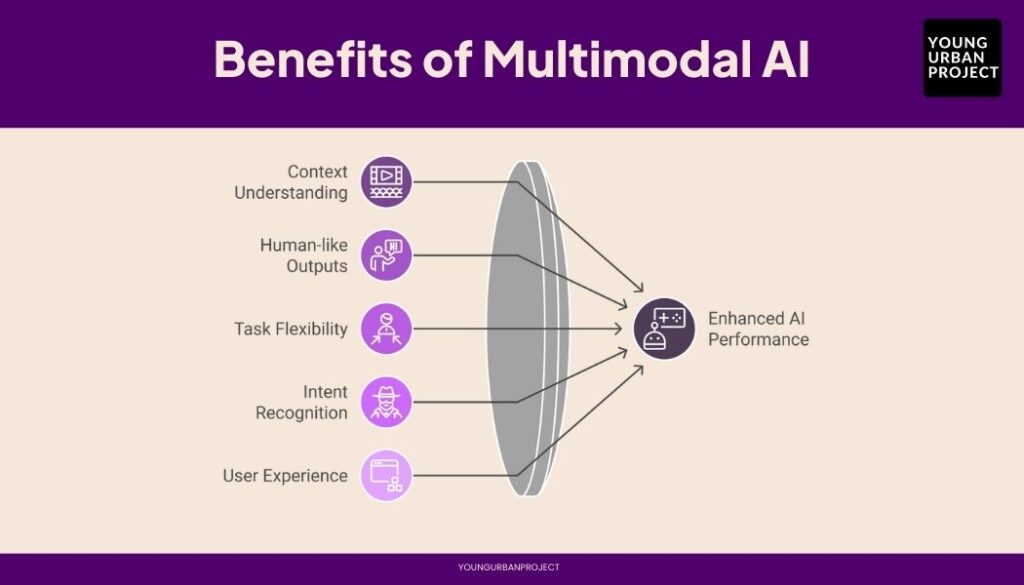

Benefits of Multimodal AI

1. Better understanding of context across inputs

The AI gets the full picture by connecting words, images, and sounds. Like how you understand a movie better with both picture and sound, these systems understand situations more completely by putting different clues together.

2. More accurate and human-like outputs

By using multiple information types, AI responses feel more natural. The systems can answer questions with the right tone and content because they understand more of what you’re asking, just like talking to a person who can see and hear you.

3. Flexibility in handling multiple types of inputs and tasks

These systems can switch between different jobs easily. The same AI can analyze pictures, answer text questions, or process voice commands without needing separate programs. This makes them useful for many different problems.

4. Enhanced user intent recognition

The AI better understands what you really want. If your words are unclear, it can look at your pictures or tone of voice to figure out your true meaning. This reduces the mistakes and frustration that arise when a system misreads your request.

5. Intuitive and seamless user experiences

Using these systems feels more natural and easy. You can talk, type, or show images without changing apps. The experience feels smooth because you can communicate in whatever way makes sense at the moment, just like with a human.

Key Challenges in Multimodal AI

1. High-quality, large-scale data requirements

These systems need huge amounts of good examples to learn from. Finding millions of properly labeled images, texts, and sounds takes time and money. Without enough quality examples, the AI makes more mistakes and doesn’t work as well.

2. Data fusion complexity

Combining different types of information is hard. Text, images, and sound work differently and contain different kinds of details. Making these work together is like translating between languages while keeping all the meaning intact.

3. Alignment across different modalities

The AI must connect related information across types. It needs to know that the word “dog” and a picture of a dog mean the same thing. Building these connections is difficult, especially for complex or abstract concepts.
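Models such as CLIP learn this kind of alignment with a contrastive objective: matching image and text pairs are pulled together, and mismatched pairs are pushed apart. The sketch below is a simplified, one-directional version of that idea with invented two-number embeddings; the real training objective is symmetric and includes a learned temperature.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def contrastive_loss(image_embs, text_embs):
    """Average cross-entropy where image i is expected to match text i."""
    total = 0.0
    for i, image in enumerate(image_embs):
        scores = [dot(image, text) for text in text_embs]
        log_sum = math.log(sum(math.exp(s) for s in scores))
        total += log_sum - scores[i]   # low when the matching pair scores highest
    return total / len(image_embs)


# Toy embeddings, invented for this example.
texts = [[1.0, 0.0], [0.0, 1.0]]               # "dog", "car"
aligned_images = [[1.0, 0.0], [0.0, 1.0]]      # each image points at its own caption
misaligned_images = [[0.0, 1.0], [1.0, 0.0]]   # each image points at the wrong caption

loss_aligned = contrastive_loss(aligned_images, texts)
loss_misaligned = contrastive_loss(misaligned_images, texts)

print(loss_aligned < loss_misaligned)
```

Training nudges the encoders so that this loss goes down, which is what gradually places the word “dog” and a picture of a dog near each other.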

4. Cross-modal translation and understanding

Converting between different types of data is challenging. The system needs to describe images accurately in words or create images from text descriptions. Each translation loses some details, just like human language translation.

5. Ethical, privacy, and bias concerns

These powerful systems raise serious concerns. They may learn biases from their training data or invade privacy by combining different data types. As they become more capable, the risks of misuse or unintended harm also increase.

What’s Next for Multimodal AI?

The future of multimodal AI is incredibly promising, opening the door to a new era of advanced AI capabilities. By integrating multiple data types, such as text, images, audio, and video, multimodal AI is reshaping how we interact with and utilize technology. Here are some exciting trends to keep an eye on:

1. Expansion of multimodal large language models

These models are evolving to handle not just text, but also video and other mediums, enabling more seamless and versatile applications across industries like education, healthcare, and entertainment.

2. Enhanced multimodal systems for creativity

Tools like text-to-video generators are becoming more sophisticated, empowering creators to produce engaging content more efficiently and with greater precision than ever before.

3. Real-time processing of multimodal inputs

The ability to analyze and respond to multiple input types in real-time is driving innovation in dynamic applications, such as virtual assistants, autonomous vehicles, and interactive simulations.

As these advancements continue to progress, multimodal AI is set to revolutionize how we solve complex problems and create new opportunities across countless fields.

Frequently Asked Questions

1. Is ChatGPT a multimodal AI model?

Yes, in many cases. ChatGPT is a product built on OpenAI’s models, and when it runs on a multimodal model such as GPT-4o it can understand text, images, and voice. However, its multimodal features depend on the platform. Some versions remain text-only, while others support richer, more interactive experiences across multiple input types.

2. What is the difference between multimodal AI and unimodal AI?

Multimodal AI processes and understands multiple data types—such as text, images, audio, and video—simultaneously, enabling a richer and more complex analysis. In contrast, unimodal AI focuses on a single data type at a time, such as only text or only images, which limits its scope and functionality in certain applications.

3. Can multimodal AI boost business processes?

Absolutely. Multimodal AI leverages different forms of data, offering businesses a more comprehensive understanding of their operations and customer needs. By integrating various data inputs, it can optimize customer interactions with personalized recommendations, streamline workflows by automating repetitive tasks, and enhance insights through deeper data analysis, driving smarter and faster decision-making.

4. What industries benefit the most from multimodal AI?

Industries like healthcare, e-commerce, education, and media benefit the most from multimodal AI. It helps doctors combine scans with patient history, improves product searches in online stores, supports visual learning in schools, and speeds up content creation. By using different types of data together, it makes work easier, faster, and more accurate across many fields.