Generative AI has been a game-changer in the tech world, redefining how we interact with machines, create content, and solve complex problems. At the heart of this innovation is something called an LLM.

💡 Did you know that some of the most groundbreaking AI tools you see today, like ChatGPT, are powered by LLMs, and that image generators such as DALL·E rely on language models to interpret your prompts? If you’re wondering, “What is an LLM in generative AI?” you’re in the right place. Let’s break it down step by step.

What Is an LLM in Generative AI?

In generative AI, LLM stands for Large Language Model. LLMs are advanced AI systems trained on massive amounts of text data to understand and generate human-like language. In simple terms, they are the brains behind many AI tools that can write essays, answer questions, translate languages, and, when paired with image models, even create art based on prompts.

But here’s the kicker: LLMs don’t just repeat what they’ve learned. They learn patterns from the data and use them to predict the next word (more precisely, the next token) in a sequence. Producing text one prediction at a time is what makes them “generative.”

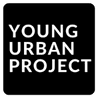

How does an LLM in Generative AI work?

1. Training Phase

The first step for an LLM is to learn the structure and nuances of human language. This happens during the training phase:

- Massive Data Consumption: LLMs are trained on enormous datasets that include books, academic papers, websites, social media posts, and more. This provides a diverse range of language patterns, styles, and contexts.

- Identifying Patterns: The AI doesn’t just “read” text—it analyzes it for patterns. It looks at how words are typically arranged, which words tend to follow others, and how context changes meaning.

- Grammar and Context: Through training, LLMs learn grammar rules and how context influences the meaning of words. For example, the word “bank” can mean a financial institution or the side of a river, depending on the context of the sentence.

Why this matters: By training on vast data, LLMs gain the ability to mimic human language in a way that feels natural.
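
As a rough illustration of the pattern-finding idea above, the sketch below counts which word follows which in a tiny made-up corpus. Real LLMs learn far richer patterns with neural networks rather than a lookup table, so treat the corpus and the `next_word_counts` helper as assumptions made purely for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; real training sets are vastly larger.
corpus = [
    "the sky is blue",
    "the sky is clear",
    "the sky is blue today",
    "the river bank is muddy",
]

def next_word_counts(sentences):
    """Count how often each word is directly followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

counts = next_word_counts(corpus)
# Words seen after "is", most frequent first.
print(counts["is"].most_common())
```

In this toy corpus, “blue” follows “is” more often than any other word, which is the kind of statistical regularity a model picks up during training.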

2. Understanding Phase

To process text, an LLM breaks it into smaller pieces called “tokens.”

- What are tokens? A token can be a word, a part of a word, or even a character. For example:

- The sentence “I love pizza” might be broken into tokens: [“I”, “love”, “pizza”].

- A subword tokenizer might instead split it into smaller pieces such as [“I”, “ lo”, “ve”, “ pizza”]. Splitting words into pieces lets the model handle rare or unfamiliar words it has never seen whole.

- Mathematical Representations: Each token is converted into a list of numbers called an embedding. Tokens with related meanings end up with similar embeddings, which lets the model capture relationships between words.

- Context Awareness: LLMs don’t look at tokens in isolation. They analyze the surrounding tokens to determine meaning. For instance, in the phrase “He went to the bank,” the word “bank” is understood as a financial institution if previous tokens indicate money, or as a riverbank if they mention water.

Why this matters: Tokens allow LLMs to process and understand text in a flexible, context-aware way.
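
To make tokens and embeddings more concrete, here is a minimal sketch. It assumes a hypothetical four-word vocabulary and hand-picked 3-dimensional vectors; real models learn their embeddings during training and use far larger vocabularies and dimensions.

```python
import math

# Hypothetical toy vocabulary: maps each token to an integer ID.
vocab = {"I": 0, "love": 1, "like": 2, "pizza": 3}

# Hypothetical hand-picked embeddings, one row per token ID.
embeddings = [
    [0.9, 0.1, 0.0],  # "I"
    [0.1, 0.8, 0.3],  # "love"
    [0.2, 0.7, 0.4],  # "like"
    [0.0, 0.2, 0.9],  # "pizza"
]

def tokenize(text):
    """Split on whitespace and map each word to its token ID."""
    return [vocab[word] for word in text.split()]

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

token_ids = tokenize("I love pizza")
print(token_ids)  # [0, 1, 3]

love, like, pizza = embeddings[1], embeddings[2], embeddings[3]
# In this toy table, "love" sits closer to "like" than to "pizza".
print(cosine_similarity(love, like) > cosine_similarity(love, pizza))  # True
```

Cosine similarity is one common way to compare embeddings: values near 1 mean two vectors point in nearly the same direction.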

3. Generation Phase

The final step is where the magic happens: text generation.

- Prediction at Work: An LLM uses what it learned in training to predict the next token in a sequence. If you type “The sky is,” the model calculates a probability for each possible next token. Words like “blue,” “cloudy,” or “clear” might get high probabilities, and the model then either picks the most likely one or samples from the likely candidates, which is why the same prompt can produce different answers.

- Iteration: After predicting one token, the model adds it to the text and predicts the next, and so on. This process continues until the model produces a special end-of-text token or reaches a length limit.

- Coherence and Creativity: LLMs maintain coherence by considering the entire context of the input, including the text they have generated so far. They don’t just generate random words; each new token is chosen to fit what came before.

Example:

- Input: “Once upon a time, there was a brave knight who…”

- Possible output: “…embarked on a quest to save the kingdom from a dragon.”

Why this matters: This ability to predict words based on context is what makes LLMs powerful for tasks like writing, answering questions, or even creating art from descriptions.
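
The predict-then-repeat loop described above can be sketched as follows. This is a minimal illustration that assumes a hand-written probability table (`next_token_probs`, a hypothetical stand-in for a real model) and uses greedy decoding, meaning it always takes the most probable token. Real systems look at the whole context and often sample instead.

```python
# Hypothetical next-token probabilities keyed by the previous token only.
# A real LLM computes these from the entire context with a neural network.
next_token_probs = {
    "The": {"sky": 0.7, "sea": 0.3},
    "sky": {"is": 0.9, "was": 0.1},
    "is": {"blue": 0.6, "cloudy": 0.3, "clear": 0.1},
    "blue": {"<end>": 1.0},
}

def generate(prompt_tokens, max_new_tokens=10):
    """Greedy decoding: repeatedly append the most probable next token."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        candidates = next_token_probs.get(tokens[-1])
        if candidates is None:
            break  # no known continuation for this token
        best = max(candidates, key=candidates.get)
        if best == "<end>":
            break  # the table signals that the response is complete
        tokens.append(best)
    return tokens

print(" ".join(generate(["The"])))  # The sky is blue
```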

Neural Networks and Transformers

The backbone of LLMs is a special type of architecture called the Transformer. This is where the “neural network” part comes into play. Here’s an explanation:

- Neurons and Layers: Neural networks are made up of layers of artificial neurons. Each neuron processes information and passes it to the next layer, similar to how brain cells communicate.

- Attention Mechanism: Transformers introduced a groundbreaking feature called “attention,” which allows the model to focus on the most relevant parts of the input text. For example, when processing “The capital of France is Paris,” the model pays more attention to “capital” and “France” to generate the correct response.

- Scaling: Modern LLMs have billions of parameters (adjustable numeric weights learned during training) that shape their predictions. In general, larger models trained on more data capture more nuance, although size alone does not guarantee better results.

Why this matters: Transformers allow LLMs to handle complex language tasks efficiently, making them state-of-the-art.
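
For readers who want to see the attention idea in code, here is a minimal sketch of scaled dot-product attention for a single query. The 2-dimensional key, value, and query vectors are made-up numbers chosen for illustration. A real Transformer learns them and runs many attention heads in parallel.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Hypothetical vectors for three input tokens.
tokens = ["capital", "of", "France"]
keys = [[1.0, 0.0], [0.0, 0.1], [0.9, 0.4]]
values = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]]
query = [2.0, 1.0]  # made-up query for the position being predicted

weights, output = attend(query, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

With these numbers, “capital” and “France” receive noticeably more weight than “of,” mirroring the example in the text.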

Let’s Simplify It With a Real-Life Analogy

Think of an LLM as a person who’s read an entire library of books:

- Training: They’ve absorbed tons of information about how sentences are structured and how words are used in different contexts.

- Understanding: When you ask them something, they quickly recall patterns and relationships between words they’ve learned.

- Generation: Based on your question, they give a natural and coherent answer, as if they’re continuing a conversation.

Why Are LLMs Crucial in Generative AI?

Large Language Models (LLMs) play a pivotal role in generative AI, transforming how humans interact with machines and opening up new possibilities in artificial intelligence.

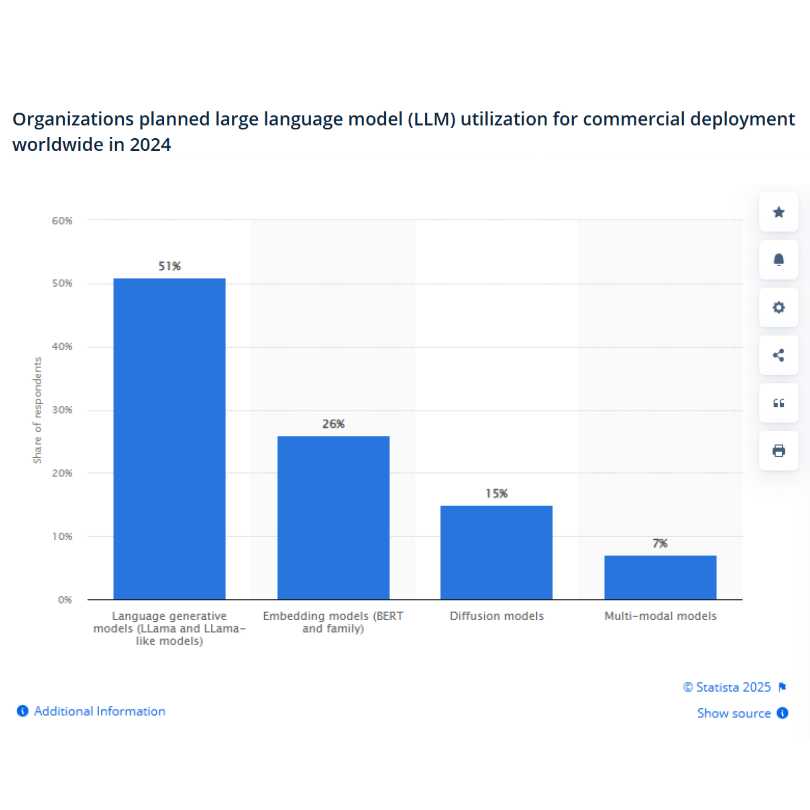

Did you know that over half of global firms planned to use LLMs as of 2024? (Statista)

Here’s a detailed breakdown of why they are so crucial:

1. Ability to Adapt to a Wide Range of Tasks

LLMs are versatile, capable of handling multiple applications, including but not limited to:

- Conversational AI: Powering chatbots like ChatGPT, they can hold meaningful conversations with users on almost any topic.

- Content Generation: Creating blogs, marketing copy, stories, or poetry in natural language.

- Coding Assistance: Helping developers by writing, debugging, and optimizing code.

- Language Translation: Converting text from one language to another with accuracy and nuance.

- Summarization: Summarizing long pieces of text or documents into concise, readable content.

2. Understanding Context and Nuance

LLMs are trained to understand language in context, which allows them to:

- Interpret Ambiguity: They handle sentences where meaning depends on context, like “He saw the man with a telescope.”

- Capture Tone: They recognize and replicate different tones—formal, casual, humorous, or professional.

- Adapt to User Intent: Whether you’re asking for a joke, a serious explanation, or a creative idea, LLMs adjust their responses accordingly.

3. Generation of Creative and Coherent Content

Unlike rule-based AI, which follows predefined logic, LLMs are generative. They can:

- Create New Ideas: Generate original text that doesn’t exist in their training data, like stories or innovative solutions.

- Produce Long-Form Content: Maintain coherence and structure in extended outputs, such as essays or reports.

- Enhance Creativity: Assist artists, marketers, and writers by brainstorming ideas or refining drafts.

Example: A marketer can use an LLM to generate multiple taglines for a product launch, picking the best one.

4. Natural and Intuitive Human-Computer Interactions

LLMs excel in creating conversational interfaces that feel human-like, which is why they are widely used in:

- Customer Support: Providing 24/7 assistance via chatbots that can resolve user queries efficiently.

- Virtual Assistants: Assistants such as Siri, Alexa, and Google Assistant are increasingly adding LLM-based features to understand user commands and provide relevant responses.

- Education: Personalizing learning experiences by explaining concepts or answering questions in an engaging manner.

5. Efficiency and Scalability

LLMs allow businesses to scale operations with minimal human intervention. For example:

- Automating Repetitive Tasks: Writing emails, reports, or social media posts.

- Personalized Marketing: Creating tailored ads or campaigns for specific audiences.

- Knowledge Management: Summarizing large datasets or documents for quick decision-making.

6. Ability to Handle Multimodal Inputs

Some LLMs, like OpenAI’s GPT-4, can process both text and images, enabling:

- Visual Context Understanding: Analyzing and describing images alongside text.

- Enhanced Creativity: Producing text that works alongside visuals, such as captions for images or copy for infographics.

7. Constant Learning and Improvement

- Fine-Tuning for Specific Tasks: LLMs can be further trained on domain-specific data, improving their accuracy for particular use cases (e.g., medical AI, legal tech).

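- Continuous Feedback Loop: Deployed LLMs do not usually learn from each conversation in real time. Instead, developers collect user feedback and use it to train improved versions, so the models get better with each release.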

8. Impact Across Industries

LLMs have revolutionized various sectors, including:

- Healthcare: Assisting in diagnostics and generating patient summaries.

- Finance: Analyzing market trends, creating financial reports, and improving customer service.

- E-commerce: Personalizing shopping experiences, improving search results, and crafting product descriptions.

- Education: Offering virtual tutors, summarizing study materials, and simplifying complex topics for learners.

How Are LLMs Trained?

Large Language Models (LLMs) are trained using a multi-step process involving tons of data, computation, and optimization. Here’s an overview:

1. Step One: Collecting Massive Amounts of Data

Think of LLMs as students, and data as their textbooks. To make these models smart enough to answer a wide variety of questions, they are fed a massive amount of information.

- What kind of data? Everything from books, academic papers, and news articles to social media posts and blogs.

- How much data are we talking about? Hundreds of billions to trillions of words. That is far more than any person could read in many lifetimes!

Why is this important? Because the more diverse the data, the better the LLM gets at understanding context, tone, and even the nuances of human language.

2. Step Two: Cleaning and Preprocessing the Data

Raw data is messy—just like your room might be after a long day. It needs cleaning before the LLM can “study” it.

- Remove irrelevant stuff: Spam, incorrect information, or duplicate content is filtered out.

- Organize the content: The data is structured into formats that machines can understand, like sentences and tokens (the small pieces of text described earlier).

- Handle biases: Efforts are made to reduce harmful biases in the training data, though this is an ongoing challenge.

This step reduces the chance that the model learns junk or misinformation, which could lead to inaccurate or harmful outputs.
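
A minimal sketch of the cleaning step, assuming a hypothetical `clean_corpus` helper that normalizes whitespace, drops very short snippets, and removes duplicates. Production pipelines are far more elaborate (quality filters, near-duplicate detection, and so on), so this only shows the general shape.

```python
import re

def clean_corpus(documents, min_words=3):
    """Normalize whitespace, drop very short texts, and remove duplicates (ignoring case)."""
    seen = set()
    cleaned = []
    for doc in documents:
        text = re.sub(r"\s+", " ", doc).strip()
        if len(text.split()) < min_words:
            continue  # too short to be useful
        key = text.lower()
        if key in seen:
            continue  # already kept an identical text
        seen.add(key)
        cleaned.append(text)
    return cleaned

raw = [
    "The  sky is   blue.",
    "the sky is blue.",
    "Buy now!!!",
    "Rivers have banks\non both sides.",
]
print(clean_corpus(raw))
# ['The sky is blue.', 'Rivers have banks on both sides.']
```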

3. Step Three: Building the Model (Where the Magic Happens)

Now, the real work begins. AI engineers create and train the LLM using advanced architectures, the most popular being Transformer models (the tech behind LLMs like ChatGPT).

- What are Transformers? Fancy neural networks designed to process and understand language efficiently. They excel at understanding relationships between words, sentences, and even entire paragraphs.

- How does the training work? The model learns to predict the next word in a sentence by analyzing patterns in the training data. For example, if the input is “The sky is,” the model might predict “blue.”

Imagine this as teaching a child to complete sentences, but on a scale so large it’s almost unimaginable.
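
To show what “learning to predict the next word” means numerically, the sketch below computes cross-entropy loss, a standard measure of how wrong a prediction is. The two probability tables are made-up snapshots of a model early and later in training. Actual training adjusts the model’s weights to push this loss down across the whole dataset.

```python
import math

def cross_entropy(predicted_probs, correct_token):
    """Loss for one prediction: small when the correct token gets high probability."""
    return -math.log(predicted_probs[correct_token])

# Hypothetical model outputs for the context "The sky is".
early_in_training = {"blue": 0.10, "green": 0.45, "banana": 0.45}
later_in_training = {"blue": 0.80, "green": 0.15, "banana": 0.05}

print(round(cross_entropy(early_in_training, "blue"), 3))  # 2.303
print(round(cross_entropy(later_in_training, "blue"), 3))  # 0.223
```

The loss drops as the model assigns more probability to the word that actually came next in the training text.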

4. Step Four: Fine-Tuning the Model

Once the LLM has been trained on general knowledge, it’s like a jack-of-all-trades but master of none. To make it really useful, it goes through fine-tuning for specific tasks.

- What is fine-tuning? It’s like sending the model to a specialized school. For example:

- To make it good at customer support, it’s trained on chat logs.

- For creative writing, it’s fed examples of poems, scripts, or novels.

- Why fine-tune? It ensures LLMs perform exceptionally well in specific scenarios instead of being just “good enough” across the board.

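Fine-tuning lets the same base model be adapted to very different jobs. Whether the task is writing marketing copy or answering technical questions, a suitably fine-tuned model typically handles it better than the general-purpose original.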

5. The Role of Supercomputers

Here’s the thing: Training an LLM isn’t something you can do on a regular laptop. It requires supercomputer-scale clusters of GPUs or similar chips with insane processing power.

- Why supercomputers? Training involves adjusting billions of parameters (the numeric weights the model learns), and doing that across a huge dataset takes heavy computational lifting.

- Energy and time costs: It can take weeks or even months to train an LLM, and the process consumes a significant amount of energy.

Think of it like baking a massive cake in an industrial oven—it takes time, precision, and a lot of heat (or in this case, computing power).
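
A quick back-of-the-envelope calculation shows why a laptop falls short. The sketch below estimates the memory needed just to store a model’s weights, assuming 2 bytes per parameter (16-bit numbers). The model sizes are hypothetical examples, and training needs considerably more memory than this for gradients and optimizer state.

```python
def parameter_memory_gb(num_parameters, bytes_per_parameter=2):
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

# Hypothetical model sizes; 2 bytes per parameter assumes 16-bit weights.
for name, size in [("1B model", 1e9), ("7B model", 7e9), ("70B model", 70e9)]:
    print(f"{name}: {parameter_memory_gb(size):.0f} GB")
# 1B model: 2 GB
# 7B model: 14 GB
# 70B model: 140 GB
```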

6. Testing and Feedback

Once trained, the LLM isn’t just released into the world immediately. It undergoes rigorous testing:

- Evaluating performance: Does it understand questions? Can it generate coherent, creative, or context-aware answers?

- User feedback: As people interact with the model, engineers continuously improve it by addressing mistakes and biases.

This process helps the LLM improve over time, as each round of testing and feedback informs the next version.

What are the challenges of using LLMs in Generative AI?

When we talk about using Large Language Models (LLMs) in generative AI, there are a few challenges:

- Data Bias: LLMs learn from huge amounts of data, but if that data has biases (like stereotypes or wrong information), the AI might end up repeating those biases in its responses.

- Understanding vs. Mimicking: These models don’t “understand” the content the way humans do. They just predict the next word based on patterns they’ve seen, which means they can sometimes give responses that sound good but don’t make much sense or have mistakes.

- Huge Resource Requirements: Training and running LLMs require lots of computing power, which can be expensive and hard to scale, especially for smaller companies or individuals.

- Lack of Common Sense: LLMs often lack basic common sense and reasoning. They can generate text that sounds logical but might not actually make sense when you think about it more deeply.

- Ethical Concerns: There’s the risk of LLMs being used to create misleading or harmful content (like fake news or deepfakes). Ensuring responsible use is tricky.

- Data Privacy: Since LLMs learn from vast amounts of data, ensuring that private or sensitive information isn’t accidentally included or leaked is an ongoing concern.

The Future of LLMs

As of January 2025, Large Language Models (LLMs) are making big strides. Here are five key trends shaping their future:

1. Efficient, Cost-Effective Models

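Startups like DeepSeek are building powerful LLMs at a fraction of the cost reported by bigger companies. DeepSeek has said that the final training run for DeepSeek-V3, the base model behind its DeepSeek-R1 reasoning model, took about two months and cost less than $6 million in computing time. That figure excludes earlier research and experiments, so it is not directly comparable to a company-wide budget, but for context, OpenAI reportedly spends around $5 billion a year. The trend suggests that AI development is becoming cheaper and more efficient.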

2. Multimodal Capabilities

LLMs are getting better at handling different types of data, like text, images, and sound. Imagine you’re using a voice assistant like Siri or Alexa to plan your day. You say, “What’s the weather like today?” The assistant responds with a text-based answer and also shows you an image of the weather forecast for your location. Then, you ask, “Can you play a playlist for a rainy day?” The assistant not only understands your request for music but also recognizes the mood from your spoken words, picking songs that match a cozy, rainy day vibe.

In this scenario, the voice assistant is using multimodal capabilities—it processes your voice (audio), understands the context (text), and presents visual elements (weather forecast image) to give you a richer, more interactive experience.

3. Specialized LLMs for Different Jobs

More LLMs are being made for specific industries like law and healthcare. These specialized models help professionals by taking care of routine tasks so that they can focus on more important work. For example, in law, LLMs are handling basic tasks, letting lawyers focus on tougher cases.

4. Improving Ethical AI and Fact-Checking

LLMs are being updated to reduce bias and limit the spread of false information. They are increasingly designed with fact-checking tools and ethical guidelines to make the content they produce more reliable. Future versions may also be more efficient, delivering faster responses while using less energy.

5. AI Agents in the Workplace

AI agents powered by LLMs are starting to help businesses by managing complex tasks, just like how spreadsheets changed financial work. These agents can break down big goals into smaller tasks, improving productivity and decision-making. Experts believe these AI agents will soon become common in workplaces to handle tasks more efficiently.

FAQs: LLMs in Generative AI

1. How is Generative AI different from LLMs?

Generative AI refers to systems that create new content like text, images, or music. LLMs, a subset of generative AI, specialize in generating human-like text based on training data. While generative AI encompasses a broader range of media types, LLMs focus on natural language processing tasks such as answering questions, summarizing, and translation.

2. What is the working mechanism of LLMs?

LLMs operate using transformer architectures, leveraging self-attention mechanisms to analyze context within text. They predict the next word or fill in missing words by understanding relationships and patterns in large datasets. During training, LLMs optimize their predictions through iterative adjustments using loss functions and backpropagation techniques.

3. What are some widely used LLMs?

Popular LLMs include OpenAI’s GPT series (which powers ChatGPT), Google’s BERT and PaLM, Meta’s LLaMA, and Anthropic’s Claude. These models are designed for various applications, from chatbots and translation tools to content generation and summarization, showcasing the diversity of modern LLMs.

4. What are the main limitations of LLMs?

LLMs face challenges like producing inaccurate or biased outputs, requiring extensive computational resources, and lacking true understanding or reasoning. Additionally, their reliance on training data means they may fail to address niche or real-time queries effectively, limiting their applicability in dynamic scenarios.

5. How can I enhance the quality of outputs from LLMs?

To improve output quality, provide clear, specific, and context-rich prompts. Experiment with rephrasing queries and include examples when possible. Additionally, setting parameters like temperature or length appropriately can fine-tune the model’s responses to match the desired tone and depth.
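
To see what the temperature setting actually does, here is a minimal sketch. It assumes made-up raw scores (logits) for three candidate tokens and applies the usual approach of dividing the scores by the temperature before converting them to probabilities. The exact parameter names and ranges vary between LLM providers.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for three candidate next tokens.
tokens = ["blue", "cloudy", "clear"]
logits = [2.0, 1.0, 0.5]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    summary = ", ".join(f"{tok}={p:.2f}" for tok, p in zip(tokens, probs))
    print(f"temperature {t}: {summary}")
```

A low temperature concentrates probability on the top choice, giving more predictable output, while a high temperature spreads it out, giving more varied output.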

6. What ethical issues arise with LLMs?

Key ethical concerns include potential misuse for spreading misinformation, perpetuating biases in training data, and threats to privacy. Transparent usage, regular audits, and adherence to ethical guidelines are essential to mitigate these risks and ensure responsible deployment of LLMs.